Insight in Plain Sight

First Experiments with Photogrammetry

Recently, I have been thinking a lot about Structure from Motion (SfM) methods and how to apply them in a self-driving car setting. While my research focused more on deep-learning-based methods, I became curious about classical methods, since they involve a great deal of maths and geometry. Eventually, I wanted to start a project in which I reconstruct my own room and somehow put it on my homepage, where visitors would be able to walk around inside it. At least, that is how I pictured the end result.

First steps with openMVG

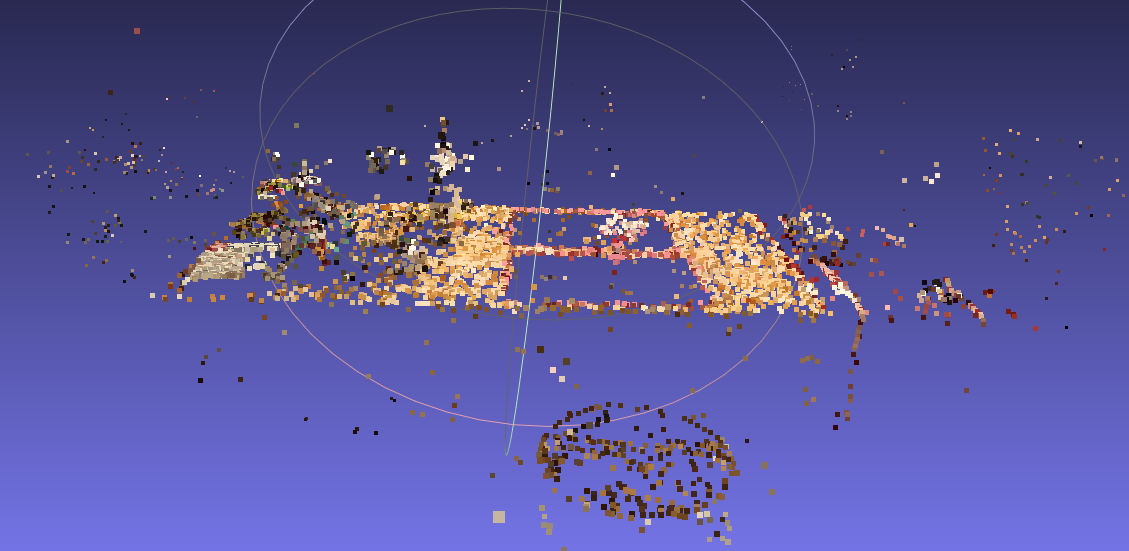

Initially, I even wanted to implement a pipeline myself, but a colleague at work recommended openMVG. MVG stands for Multiple View Geometry, and it sounded like something that would fit my needs. I took some images of my table at home and fed them into the standard pipeline. The results were not what I had expected. openMVG actually does a great job of creating the point cloud, but the point cloud was too sparse. Homogeneous areas on my table left a lot of holes in the reconstruction, and a lot of irrelevant background was reconstructed as well. Of course: how would the algorithm know what was relevant in the scene and what was not? Nothing told it that the scene was a table. I realized that point clouds were not the right representation for viewing the scene. What I was missing, and what openMVG does not deliver, were meshes and textures.
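Why do homogeneous areas leave holes? A sparse SfM pipeline only reconstructs points it can detect and match across several images, and feature detectors respond to local changes in intensity. The sketch below illustrates this with the Harris corner response, chosen only because it is short (it is not necessarily the detector openMVG runs). It scores three synthetic 8x8 patches with a single window covering the whole patch: a flat one standing in for a plain table top, one with a single edge, and one with a corner. The patches and the constant `k = 0.05` are illustrative assumptions.

```python
import numpy as np


def harris_response(patch: np.ndarray, k: float = 0.05) -> float:
    """Harris corner response R = det(M) - k * trace(M)^2 for one window
    that covers the whole patch (M is the summed structure tensor)."""
    # np.gradient returns the derivative along axis 0 (rows, y) first,
    # then along axis 1 (columns, x).
    iy, ix = np.gradient(patch.astype(float))
    # Structure tensor entries summed over the window.
    sxx = np.sum(ix * ix)
    syy = np.sum(iy * iy)
    sxy = np.sum(ix * iy)
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return float(det - k * trace ** 2)


# Hypothetical test patches.
flat = np.full((8, 8), 0.5)            # homogeneous surface, no texture
edge = np.zeros((8, 8))
edge[:, 4:] = 1.0                      # a single vertical edge
corner = np.zeros((8, 8))
corner[4:, 4:] = 1.0                   # an L-shaped corner

for name, patch in [("flat", flat), ("edge", edge), ("corner", corner)]:
    # flat: zero, edge: negative, corner: positive
    print(name, harris_response(patch))
```

The flat patch has zero gradient everywhere and therefore scores exactly zero, so a detector finds nothing there to match or triangulate, which is consistent with the holes on the table surface. An edge is ambiguous along its own direction (negative score); only a corner is distinctive in both directions.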

Switching to Meshroom

After some searching on the internet, I found the Photogrammetry subreddit. When I saw the 3D models other users had posted, I was blown away. They had created life-like models of objects in excellent quality, with smooth surfaces and no artifacts. I wasn't aware that consumer software was already so advanced and that you did not need a degree in computer vision to make high-quality models. Some users suggested Meshroom as a starting point. It is free and open source, so it was a perfect choice. Fortunately, my hardware already met the requirements to run Meshroom smoothly: 16 GB of RAM and an NVIDIA RTX 2060 Super with 8 GB of VRAM.

What surprised me is how well-designed and user-friendly Meshroom is. You can simply drag and drop images into a window, and with the click of a button the standard pipeline outputs a point cloud, meshes and textured meshes. All of these results can be visualized in a separate window. The pipeline steps are represented as nodes in a graph, and you can easily insert other functions and set up multiple paths. For example, you can set up a draft path that skips the most compute-intensive tasks. You can add paths that do extra processing, such as reducing the number of vertices in the mesh. You can duplicate a branch of your pipeline and give it different parameters. And since results are cached, you only need to recompute what has changed and what depends on those changes. The only thing that is not obvious is how the nodes fit together; that probably takes some more insight to figure out.
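The caching behaviour described above can be illustrated with a small dependency graph. The following is a minimal Python sketch, not Meshroom's actual implementation: each node's cache key hashes its own name and parameters together with the keys of its inputs, so changing a parameter invalidates that node and everything downstream, while upstream results are reused. `Node`, `Graph` and the toy steps `features`, `sfm` and `decimate` are hypothetical names chosen for illustration.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List, Optional


class Node:
    """One pipeline step: a function, its parameters and the nodes it reads from."""

    def __init__(self, name: str, func: Callable[..., Any],
                 inputs: Optional[List["Node"]] = None,
                 params: Optional[Dict[str, Any]] = None):
        self.name = name
        self.func = func
        self.inputs = list(inputs or [])
        self.params = dict(params or {})


class Graph:
    """Evaluates nodes on demand and caches every result under a content key."""

    def __init__(self) -> None:
        self.cache: Dict[str, Any] = {}
        self.runs: List[str] = []  # names of nodes that were actually computed

    def key(self, node: Node) -> str:
        # Hash the node's name and parameters together with the keys of its
        # inputs, so any upstream change also changes every downstream key.
        # (The function body is not hashed; the name stands in for it.)
        upstream = [self.key(n) for n in node.inputs]
        payload = json.dumps([node.name, node.params, upstream], sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def evaluate(self, node: Node) -> Any:
        k = self.key(node)
        if k not in self.cache:
            args = [self.evaluate(n) for n in node.inputs]
            self.cache[k] = node.func(*args, **node.params)
            self.runs.append(node.name)
        return self.cache[k]


# Toy stand-ins for real steps (feature extraction, SfM, mesh decimation).
features = Node("features", lambda n: list(range(n)), params={"n": 5})
sfm = Node("sfm", lambda f: [x * 2 for x in f], inputs=[features])
decimate = Node("decimate", lambda pts, max_vertices: pts[:max_vertices],
                inputs=[sfm], params={"max_vertices": 3})

g = Graph()
print(g.evaluate(decimate), g.runs)  # [0, 2, 4] ['features', 'sfm', 'decimate']

g.runs.clear()
decimate.params["max_vertices"] = 2  # change only the last step
print(g.evaluate(decimate), g.runs)  # [0, 2] ['decimate']
```

Changing `features.params["n"]` instead would change every key in the chain and recompute all three steps. Because keys depend on content rather than on node identity, a duplicated branch with different parameters shares the cached results of every upstream step it has in common with the original.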